Testing Search Forms

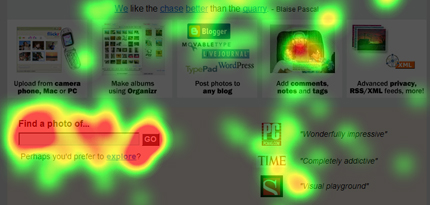

We began our tests by observing and analyzing users’ behavior when using search on these popular sites:

As I mentioned previously, we used Google during our training tests. This training test ensured that users could complete the subsequent tasks without hesitating out of confusion about the task itself. During this very first test, the pattern I described earlier first arose, highlighting the way rookie users interact with search: they continually jumped from the input field to the submit button, as many as three times. There really are no intermediate users of Google, but the behavior of all pro users was characteristic of the way they’d interact with a well-known form. They took just one look to acquire the visual target, the search input field, typed the search string, then pressed Enter to submit the search, almost without looking at the submit button.

How We Tested

We held three different rounds of test sessions. In each session, an individual user evaluated the user interfaces we were testing in random order. During the first round of testing, we tested the usability of search forms. Each session started with a training task, in which our testers demonstrated the task that the user would repeat throughout the test session, using a form that was well known to all the users: Google™ search. Then, the user used other search forms to perform this task: locate the search box, then search for the word “eye.” The task ended when the user clicked the submit button.
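As a rough illustration of the session structure described above, the following Python sketch builds a per-participant plan: the training form comes first, then the remaining forms in random order. The function name `session_order`, the participant IDs, and the placeholder site names are all assumptions made for this example; they are not taken from our actual test setup.

```python
import random


def session_order(training_form, test_forms, participant_id, seed=0):
    """Return the order of forms for one participant (hypothetical helper).

    The training form always comes first; the remaining forms are shuffled.
    Seeding the generator per participant keeps each plan reproducible.
    """
    rng = random.Random(f"{seed}-{participant_id}")
    shuffled = list(test_forms)  # copy so the caller's list is not modified
    rng.shuffle(shuffled)
    return [training_form] + shuffled


# Placeholder names: the sites actually tested are not listed here.
forms = ["Site A", "Site B", "Site C", "Site D"]

for participant in ["P01", "P02", "P03"]:
    print(participant, session_order("Google", forms, participant))
```

Seeding per participant is just one design choice; it lets you regenerate the same plan later when matching gaze recordings back to the order in which a user saw the forms.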

Patterns in the Test Results

Even though some unanticipated results came to our attention during the tests, strong patterns emerged in the test results. These patterns arose from the way users’ different levels of expertise affect the way they look at forms. While rookies repeatedly scanned the form, both up and down and left to right, the pro users looked directly at what they knew were the tools they needed to complete their task.

Gaze plots showed the very different behaviors of rookies and pros when using search forms, as follows:

- As shown in Figure 1, the rookie user looked at the input field, then the submit button, then the label; then looked again at the input field, the submit button, and the label. Looping repeatedly through this pattern, even when typing the search string, she continued to look at all the form elements to assure herself she was doing the right thing.

- The pro user’s first fixation was directly on the input field. She didn’t look at anything else until she’d typed the text string and was ready to submit the search. Then, a long saccade moved the user’s focus to the submit button—which the user had perceived peripherally, but never looked at before—and, typically, pro users activated the submit button using the Enter key. In one case, we had the longest saccade ever recorded in our tests: nearly 600 pixels wide!
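The two gaze patterns above can be told apart with very simple measures. The Python sketch below is a minimal example, not the analysis software we used: it assumes each fixation has already been exported as screen coordinates in pixels and tagged with an area of interest ("input", "submit", or "label"). The `Fixation` type, both helper functions, and all the coordinates are hypothetical and invented for illustration.

```python
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class Fixation:
    x: float  # horizontal screen position, in pixels
    y: float  # vertical screen position, in pixels
    aoi: str  # area of interest the fixation fell on


def saccade_lengths(fixations):
    """Straight-line distance in pixels between consecutive fixations."""
    return [hypot(b.x - a.x, b.y - a.y)
            for a, b in zip(fixations, fixations[1:])]


def count_transitions(fixations, src, dst):
    """Number of direct jumps from one area of interest to another."""
    return sum(1 for a, b in zip(fixations, fixations[1:])
               if a.aoi == src and b.aoi == dst)


# Invented data: a rookie looping input -> submit -> label ...
rookie = [
    Fixation(300, 100, "input"), Fixation(520, 100, "submit"),
    Fixation(200, 100, "label"), Fixation(300, 100, "input"),
    Fixation(520, 100, "submit"), Fixation(200, 100, "label"),
    Fixation(300, 100, "input"), Fixation(520, 100, "submit"),
]
# ... and a pro making a single long saccade to the submit button.
pro = [Fixation(300, 100, "input"), Fixation(880, 100, "submit")]

print(count_transitions(rookie, "input", "submit"))  # 3
print(count_transitions(pro, "input", "submit"))     # 1
print(max(saccade_lengths(pro)))                     # 580.0
```

A high count of input-to-submit jumps suggests the rookie pattern, while a single long saccade from the input field to the submit button matches the pro pattern. Any thresholds for separating the two would need to be calibrated against real recordings.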

Rookies needed reassurance that their actions were correct, so while typing search strings, they continually checked whether the input field they were using was actually the search field. Though we first noticed this pattern while analyzing the use of search forms, we found that this pattern was also characteristic of novice users’ behavior during more complex tasks.

Practical advice to UX designers—Clearly label input fields. Doing so won’t bother pro users, but is a great help to your novice users.