Reading Is Not Natural and Is Easily Disrupted by Poor Design

The odds are that you are a fairly good reader. You learned to read as a child, you’ve read a great deal over your lifetime, and reading is a big part of your life. For you, reading feels automatic and natural. But reading text is no more natural to human beings than playing the violin, riding a bicycle, playing chess, or memorizing and reciting the lines of a play. Like those activities, reading is a cognitive skill that must be taught and practiced.

Learning to read differs from learning to speak and understand a first language. For young children, learning a first language is natural. They are wired to do it. Over hundreds of thousands—perhaps millions—of years, the human brain evolved the neural structures necessary to support spoken language. Certain areas of the young human brain are dedicated to learning language. As a result, normal humans are born with an innate ability to learn whatever language they are exposed to, without systematic training (Sousa, 2005).

In contrast, writing and reading did not exist until several thousand years ago and did not become common until four or five centuries ago—long after the human brain had evolved to its current state. The brains of young children show no special ability for learning to read. Like juggling or reading music, reading is an artificial skill we learn through systematic instruction and practice.

Because people are not innately wired to learn to read, children whose parents don’t read to them or who receive inadequate reading instruction in school may never learn to read well—if at all. As a result, there are many illiterate and quasiliterate people, especially in the developing world. In comparison, very few people never learn to speak a language.

Learning to read involves training our brain, including our visual system, to recognize patterns. Lines, contours, and shapes are basic visual features our brain recognizes innately. We don’t have to learn to recognize them. But we do have to learn to combine these basic visual features to perceive characters (letters and digits), morphemes (units of meaning), words, phrases, and sentences.

To get a sense of what text looks like to someone who cannot read, look at a paragraph of text in a language and script you do not know, like the Amharic script shown in Figure 1, which is used in Ethiopia.

Poor handwriting or presentation of printed text can reduce skilled readers’ reading speed and comprehension to levels similar to those of poor readers. For unskilled readers, poor text presentation can thwart their ability to read altogether. Design factors that can harm users’ ability to read text in a user interface include the following:

- uncommon or unfamiliar vocabulary—Words that are likely to present difficulties include rarely used words like bailiwick and penultimate, as well as computer-geek terms like authenticate and defragment.

- difficult scripts and typefaces—Elaborate typefaces are more difficult to read. For example, TEXT IN ALL CAPS is hard to read, especially when it’s also in a fancy font.

- tiny fonts—If text is too small for a user’s visual system to easily and accurately perceive its visual features, it will be hard to read, as this tiny text shows: Text in a tiny font.

- centered text—Centered text disrupts the systematic eye movements our eyes become trained to make when we learn to read, because it requires our eyes to constantly readjust their position, as you’ll experience when you try to read this centered text:

Centered text disrupts the systematic eye movements

our eyes become trained to make

when we learn to read,

because it requires our eyes

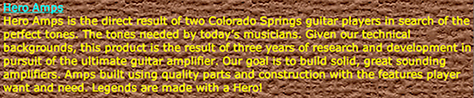

to constantly readjust their position.

- backgrounds with inadequate contrast—Text on a background with inadequate value contrast is sometimes impossible to read.

- text on patterned backgrounds—Patterned backgrounds disrupt the recognition of visual features and patterns that is the essential skill for reading, as demonstrated by Figure 2.
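Of the factors above, inadequate contrast is the easiest to check numerically. The text does not name a metric, so the sketch below assumes the contrast-ratio formula from the Web Content Accessibility Guidelines (WCAG) 2.x, which compares the relative luminance of the text color and the background color. The function names are hypothetical, and the 4.5 threshold is the WCAG level AA minimum for normal-size text, not a figure from this article.

```python
from typing import Tuple

RGB = Tuple[int, int, int]  # 8-bit sRGB color, each channel 0-255


def _linearize(channel: int) -> float:
    """Convert one 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: RGB) -> float:
    """Relative luminance: 0.0 for black, about 1.0 for white."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(text: RGB, background: RGB) -> float:
    """WCAG contrast ratio, from 1 (identical colors) to 21 (black on white)."""
    lum_a = relative_luminance(text)
    lum_b = relative_luminance(background)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


def has_adequate_contrast(text: RGB, background: RGB, minimum: float = 4.5) -> bool:
    """Assumed threshold: WCAG AA for normal-size text (4.5:1)."""
    return contrast_ratio(text, background) >= minimum


if __name__ == "__main__":
    white = (255, 255, 255)
    for name, color in [("black", (0, 0, 0)), ("light gray", (200, 200, 200))]:
        ratio = contrast_ratio(color, white)
        print(f"{name} on white: {ratio:.2f}:1, adequate={has_adequate_contrast(color, white)}")
```

A check like this only covers text on a solid background. It says nothing about patterned backgrounds, where the contrast varies from one part of a letter to the next.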

Short-Term and Long-Term Memory Aren’t Separate Stores

Historically, cognitive psychologists have distinguished short-term memory from long-term memory. Short-term memory covers situations in which people retain information for very short intervals, typically ranging from a fraction of a second to several seconds, but perhaps as long as a minute. Long-term memory covers situations in which people retain information over longer periods such as hours, days, years, or even lifetimes.

In the 1970s, when I was in graduate school, psychologists were divided about whether short-term and long-term memory are separate functions that are mediated by different areas of the brain. Some claimed they are separate, pointing to the fact that damage to certain areas of the brain causes short-term memory deficits, but not long-term memory loss, and vice versa. Other psychologists claimed the brain has only one seat of memory that has different behavioral characteristics at different time scales. Today, we know that the latter theory is closer to being correct (Jonides et al., 2008).

An analogy may help here. We can think of long-term memory as a huge warehouse with items piled up—some recently arrived; others old and covered with dust. New items arrive through several doors—these correspond to our perceptual senses—and are temporarily illuminated by the light of the open doors, but quickly get pushed into the dark warehouse. On the ceiling are four spotlights, which move around the warehouse, lighting up certain items. Items are illuminated when they enter the warehouse or are hit by a spotlight, then glow for a short time thereafter. When one item glows, other items near it also glow briefly. Glowing items might attract a spotlight.

Short-term memory is what is in the spotlights. The items in it are the focus of our attention. It is not a place where memories and perceptions go for our brain to work on them.