Modern Industrial Society Has Made Our Retina’s Rods Obsolete

In college and graduate school, I learned the following about human vision:

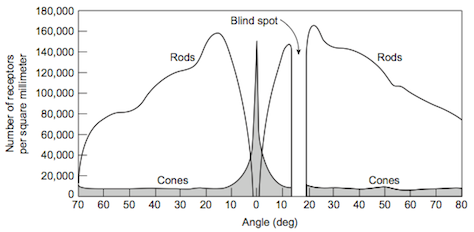

- The retina at the back of our eyes—the surface on which our eyes focus images—has two types of light-receptor cells: rods and cones.

- The rods detect light levels, but not colors, while the cones detect colors.

- There are three types of cones, which are sensitive to red, green, and blue light, respectively, suggesting that human color vision encodes colors as combinations of red, green, and blue pixels, similar to the way computers and digital cameras encode colors.

However, what I did not learn until recently—from Colin Ware’s book Visual Thinking for Design—is that people who live in today’s industrialized societies don’t use their retinal rods much.

The rods evolved to help humankind and our predecessors in the animal kingdom see in poorly illuminated environments—for example, at dusk, at dawn, during the night, or in dark caves. We all—humans and animals—spent much of our time in poor lighting until the nineteenth century, when electric lighting was invented and became widely used in the developed world. But our retina’s rods function only at low levels of light. Bright light—even normal daylight—saturates them, yielding a maxed-out signal that conveys no useful information.

Today, those of us living in the developed world rely on our rods only when we are doing things like having dinner by candlelight, feeling our way around our homes during a nighttime power outage, camping outside after dark, or going on a moonlit stroll. In bright daylight and artificially lighted environments, where we spend most of our time, our rods are completely maxed out. Therefore, our visual perception usually comes entirely from our cones.
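To make the idea of a maxed-out signal concrete, here is a minimal sketch in Python. It is a toy model, not a physiological one: the function name `rod_signal`, the clipping rule, and the light levels (in arbitrary units) are all assumptions chosen only for illustration.

```python
def rod_signal(light_level, saturation_level=1.0):
    """Toy rod response: grows with light, then clips at a maximum of 1.0.

    Both the linear growth and the saturation level are illustrative
    assumptions, not measured properties of real rods.
    """
    return min(light_level / saturation_level, 1.0)


# Hypothetical light levels for three surfaces in a dim scene and a bright scene.
dim_scene = [0.1, 0.4, 0.8]
bright_scene = [50.0, 200.0, 900.0]

dim_signals = [rod_signal(level) for level in dim_scene]
bright_signals = [rod_signal(level) for level in bright_scene]

print("dim:", dim_signals)        # three different values
print("bright:", bright_signals)  # every value clipped to the same maximum
```

In the dim scene, each surface produces a different signal, so the toy rod can tell the surfaces apart. In the bright scene, every surface produces the same clipped value, so the signal carries no information about which surface is which.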

Many Animals See Colors

Even though Designing with the Mind in Mind is about the human mind, the reading I did to prepare for writing it taught me some things about animal vision as well. For example, I learned that much of what I thought I knew about animals’ color vision was incorrect.

Everything I had read about animal vision in my youth suggested that primates (lemurs, monkeys, apes, and humans) are the only animals that see colors. All other animals, I had thought, have only brightness-detector cells (that is, rods) and, therefore, cannot distinguish different colors. Wrong!

It turns out that many different animals, ranging all across the animal kingdom, can see colors. However, this does not mean their color vision works the same way ours does or that they see the same colors we see. There is really no way to know what colors animals see, because color is not an objective property of the world, but rather an artifact of perception—something the brain constructs to distinguish different frequencies of light. Thus, saying that many animals can perceive color just means that they can distinguish different colors. Usually these are colors that are important to their survival. Some animals can even distinguish more colors than people can.

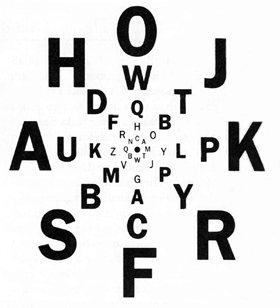

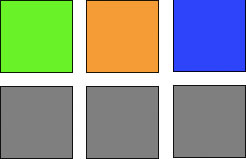

An ability to perceive—that is, distinguish—colors requires that an animal’s eyes have more than one type of light sensor, each of which is sensitive to different frequencies of light. If all of an animal’s light sensors are sensitive to the same range of light frequencies, the animal can distinguish only levels of brightness, not colors. Swatches of color that differ only in hue, like those in Figure 1, would appear the same to such an animal.

But if an animal has two or more different types of light-sensor cells, each sensitive to a different range of light frequencies, that animal probably can distinguish different colors. (I say probably because some animals behave as though they cannot distinguish colors, even though their eyes contain different types of light-sensor cells.) What may be surprising is that many different animals, from insects to mammals, have two or more types of light detectors.
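The reasoning in the two paragraphs above can be sketched in a few lines of Python. This is a toy model under stated assumptions: the bell-shaped sensitivity curve, the peak wavelengths, the curve width, and the names `sensitivity`, `responses`, and `can_distinguish` are all hypothetical and do not describe any real animal's eye.

```python
import math


def sensitivity(peak_nm, wavelength_nm, width_nm=40.0):
    """Toy bell-shaped sensitivity of one sensor type (assumed shape)."""
    return math.exp(-(((wavelength_nm - peak_nm) / width_nm) ** 2) / 2)


def responses(sensor_peaks, wavelength_nm, intensity):
    """Signal from each sensor type for a light of a single wavelength."""
    return [intensity * sensitivity(peak, wavelength_nm) for peak in sensor_peaks]


def can_distinguish(sensor_peaks, light_a, light_b, tolerance=1e-9):
    """True if at least one sensor type responds differently to the two lights."""
    signals_a = responses(sensor_peaks, *light_a)
    signals_b = responses(sensor_peaks, *light_b)
    return any(abs(a - b) > tolerance for a, b in zip(signals_a, signals_b))


# Two lights of different wavelength. The second light's intensity is chosen
# so that a single sensor peaking at 500 nm responds equally to both.
light_a = (480.0, 1.0)
matched_intensity = sensitivity(500.0, 480.0) / sensitivity(500.0, 560.0)
light_b = (560.0, matched_intensity)

one_sensor_type = [500.0]          # hypothetical single-sensor eye
two_sensor_types = [450.0, 550.0]  # hypothetical two-sensor eye

print(can_distinguish(one_sensor_type, light_a, light_b))
print(can_distinguish(two_sensor_types, light_a, light_b))
```

With one sensor type, a change in wavelength can always be cancelled out by a change in brightness, so the two lights produce the same signal. With two sensor types whose sensitivities differ, the same pair of lights produces a different pattern of signals, which is what makes telling colors apart possible.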

Animals that can see colors include the following:

- Bees have three types of color detectors in their eyes, so they can distinguish different colors. Unlike ours, their light-detector cells are totally insensitive to low-frequency, or red, light, but are sensitive to ultraviolet light—higher light frequencies than human eyes can perceive. Therefore, bees can distinguish colors that we cannot and vice versa.

- Some butterflies have between four and six types of photoreceptors and, therefore, can distinguish many more colors than we can. This makes sense—a butterfly spends its life seeking out the flowers that have the most nectar.

- Many fish and birds have four types of photoreceptors, making them capable of distinguishing more colors than primates can distinguish.

- Dogs have two types of cones in their eyes. The sensitivities of their cones are very similar to those of humans who have red-green color-deficient vision. Therefore, don’t expect a dog to distinguish red from green very well.

Animals that cannot see colors include the following:

- Bulls cannot see the color of a matador’s red cape. Like all cattle, bulls have only rods in their eyes, so they cannot perceive color. In bullfights, bulls respond only to the movement of the matador’s cape. While the capes are red by tradition, they could just as well be purple, pink, or green. Imagine green matador capes!

- Guinea pigs have only rods—not cones—so they cannot see color.

- Owls have no cone cells, so they cannot see colors. In fact, many animals that are active mainly at night lack color vision. That makes evolutionary sense: they have little opportunity to use color vision.

- The owl monkeys of Central and South America, despite being primates, have only one type of retinal cell and, therefore, cannot see colors. The evolutionary reason may be similar to that for owls: a nocturnal lifestyle.

- Cats are an odd case: while their eyes have three types of cones, the proportion of cones relative to rods is very low compared with primates. Perhaps this is because cats are, at least in the wild, active mainly at night. Cats usually behave as though they are totally colorblind, but under certain conditions they can distinguish orange-red objects from blue-green objects.